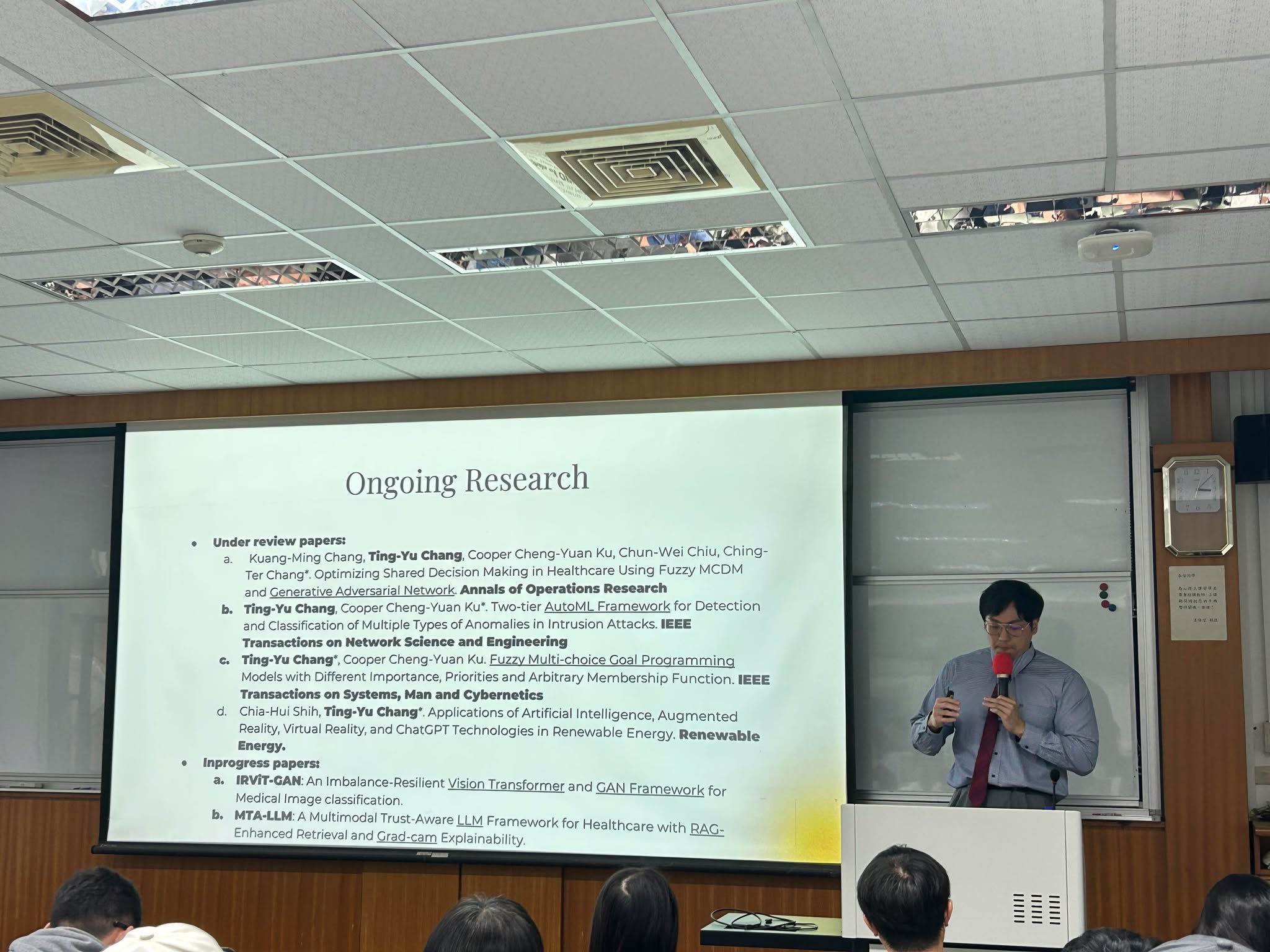

【專題演講】114/3/13(四) 15:00 – 16:00 Ting-Yu Chang

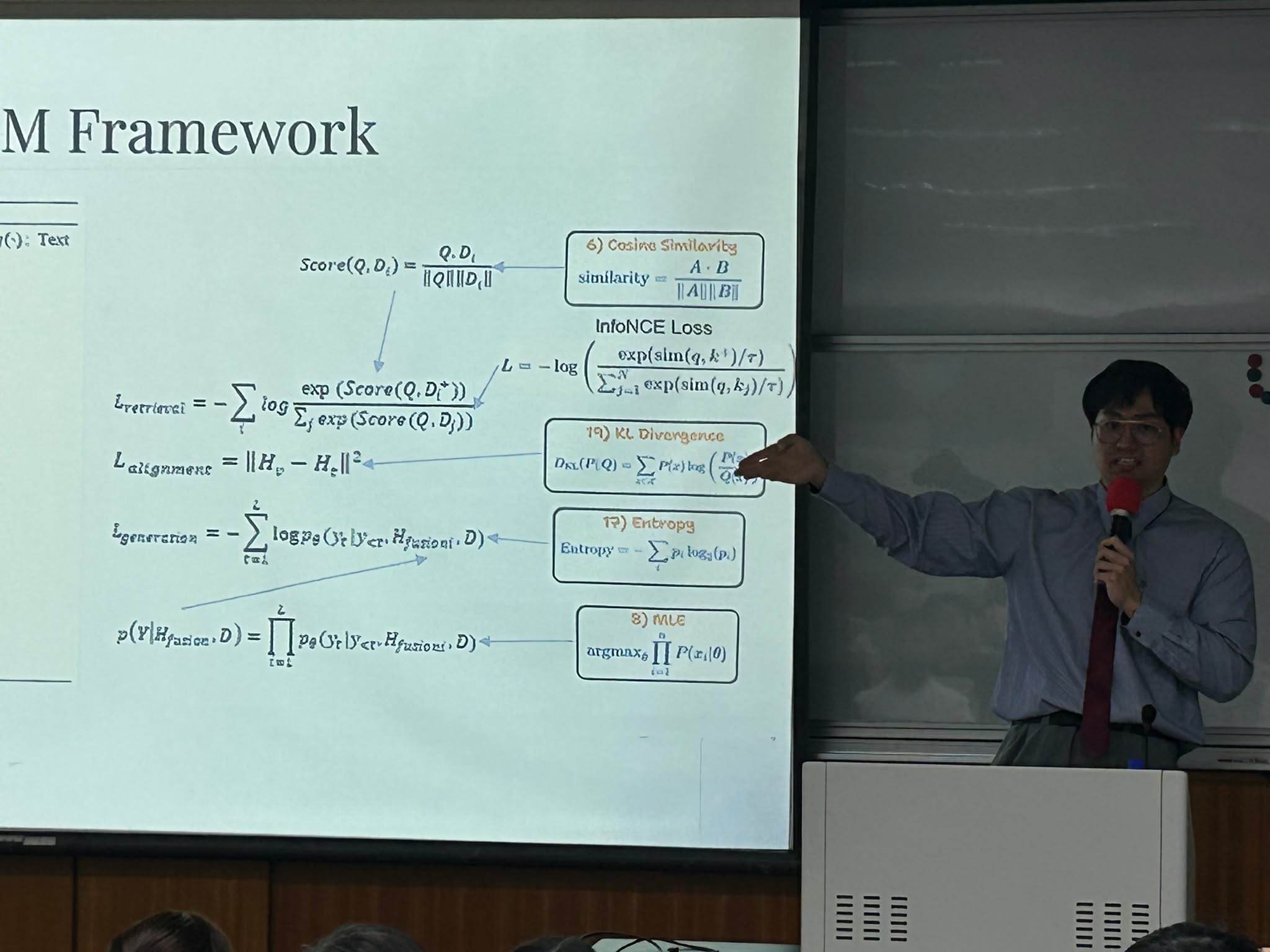

The topic of this presentation will introduce the current development and background of Multimodal Large Language Models (MLLMs) in the medical field. In recent years, with the emergence of models such as GPT4, MLLMs have demonstrated the potential to integrate imaging and textual information for medical applications. For instance, in radiology diagnostics, combining patient images (such as X-rays) with clinical text data is expected to provide more comprehensive diagnostic support.

However, MLLMs face several key challenges in medical applications,including the need for large-scale, high-quality training data, the complexity of integrating heterogeneous medical data (such as images and electronic health records), and the lack of interpretability in model decision-making processes. In particular, many current medical imaging analysis models produce results that are difficult to explain, leading to a lack of trust among clinicians and patients in AI models.

To enhance model transparency (by introducing Grad-CAM) and reliability (through RAG), and to foster user trust, addressing these issues has become a crucial prerequisite for the successful implementation of MLLMs in the medical field.